How can we analyse approximately 70 years of content within the British Journal of psychiatry (BJP) amounting to a total of 51.479 pages? This pressing question extends to the French, Dutch, Belgian and German equivalent of this journal. I gathered a corpus of between 250.000 and 300.000 pages. Furthermore, the content inside these journals is very diverse and includes: articles, book reviews, conference notes, asylum reports, membership lists and obituaries. The sheer volume and diversity of the corpus poses a serious challenge. How can a historian find and trace relevant information and analyse the evolution of specific ideas throughout such a corpus?1

From the perspective of the computer scientist, humanities research also forms an interesting challenge. The sources that historians work with are often unstructured and irregular. The BJP corpus made for an interesting case-study due to the poor Optical Character Recognition (OCR) quality and lack of a regular volume structure or logical units. Boundaries between the elements of the journal were not easy to detect. The table of contents was often absent or difficult to parse reliably due to the PDF to TXT conversion and OCR errors. In working with this corpus from a historical as well as computer science perspective I started a project with Maria Biryukov, Roman Kalyakin and Lars Wieneke.

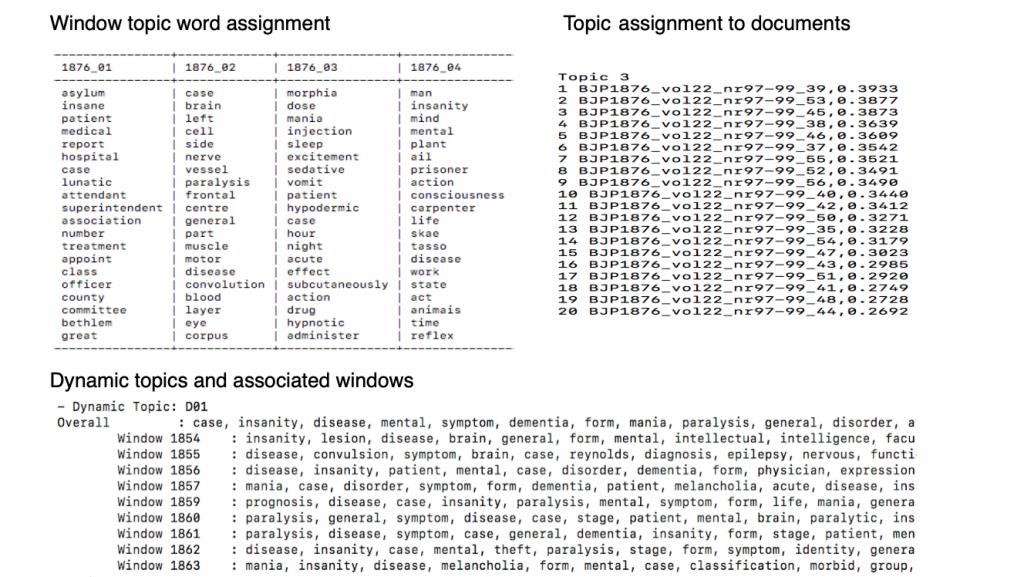

How can we select relevant segments of the corpus for historical analysis? Topic Modelling (TM)2 can discern topics within the corpus without requiring an annotated dataset, such as in the research of Shohreh Hadadan.3 Furthermore, TM enables a topic-driven selection of content for deeper analysis. In the project, we needed to learn which topics were present in the journal and to what extent; where text corresponds to various topics in the journal; and how to select relevant parts of the text for deeper investigation.

To illustrate how we can validate and use these topic models within historical research, I will explore the disease General Paralysis (GP), which was quite common in nineteenth century asylums. Some of the symptoms of this disease were problems with speech, and ataxia (involuntary coordination of muscle movement) which could lead to paralysis and death. Physicians and psychiatrists at the time had difficulties finding the cause of this disease. From the middle of the nineteenth century the debate centred around whether syphilis was a cause of GP.

In order to evaluate this particular topic and its corresponding page ranking4 I compared the results to existing historical research. In particular I looked into to the PhD thesis of Judith Hurn that was published in 1998.5 Her thesis investigated how GP affected the formation of the psychiatric profession in the United Kingdom. We got similar source suggestions and could observe the same tendencies. Her research validated the pipeline developed by Maria, but also demonstrated the added value of topic modelling.

First of all, TM allowed us to discover more relevant content. The table of content on the right for instance, would only point Hurn to the first article about syphilitic epilepsy. Applying TM to digitised journals directed us to other instances where this subject appears, including information in sub-sections of the journal. TM thus provides the researcher with actual content about the topic of interest, because the pipeline also picks up hidden and weak signals.

The system also shows the evolution of this particular subject over time. For instance, Hurn placed a lot of emphasis on French influences, but the sources compiled by me contradicted this bias to a certain extent. The sources demonstrate that information from Germany also reached Britain. Not only did the journal include reviews of German books, but the translations of German articles also indicate the importance British psychiatrists placed upon German research.

Exploring and processing large amounts of data is often easier if the data is visualised. Therefore, we also demonstrate the accomplishments of our Topic Modelling pipeline in a visualisation tool called Histograph. This visual interface developed by the CVCE Digital Humanities Lab improves navigating and exploring a big corpus. The photograph shows the “bucket of explorables”, a method of distant reading. For each topic, the size of the dots indicates their relative presence/importance throughout time with larger dots representing the most important topics. The tool also allows you to select a specific topic and filter the results. During the nineteenth century the debate centred around the role of syphilis in causing GP. By only selecting pages where the word paralysis and syphilis occur together, the researcher can close read only the pages the algorithm suggests to form an idea of this debate.

[masterslider id=”19″]

The use of the topic modelling pipeline allows the researcher to analyse a larger number of sources by only pointing to the sections of interest, especially for transnational research this approach could be useful. In future research we will fine tune our TM pipeline and the Histograph visualisations by allowing the integration of corpora in different languages and by integrating a feature that allows custom topic modelling. The new features give the researcher even more freedom in how particular information is extracted from the corpus, putting their thought and research processes at the forefront.

- This blog is based on a presentation delivered at DHBenelux 2019 in Liège on 12/09/2019. Credit is due to all people involved in this project: Maria Biryukov, Roman Kalyakin and Lars Wieneke.

- Topic modelling is an unsupervised machine learning technique that aims to group large amounts of documents into semantically meaningful clusters, such that documents assembled into one cluster would represent a topic. Topics are learned by the algorithm from the data (corpus, documents) , based on the word distribution.

- The TM pipeline involved (1) data pre-processing such as pdf to txt conversion and volume to page conversion. As well as maximizing the number of content-bearing pages via stop word lists and a threshold calculation. (2) the implementation of Non-negative Matrix Factorization (NMF) (Lee & Seung, 1999) to create the topics. (3) topic model coherence calculation via Word2Vec and via human evaluation of the number of suggested topics and page rankings.

- In page ranking the computer decides how relevant each page is to a certain topic based on the related words that can be found on a page.

- Hurn, J.D., The history of general paralysis of the insane in Britain, 1830 to 1950. Doctoral thesis, 1998, University of London.